FreeBSD Virtual Data Centre with Potluck: DevOps & Infrastructure as Code - Part I

Contents

This article is split into three parts:

- Part I - Overview and Basic Setup

- Part II - Setting Up Consul, Nomad & Traefik

- Part III - Testing the Environment with Nginx & Git

Introduction

Yes, FreeBSD Lacks Kubernetes - But It Does Not Really Matter…

One of the main complaints about FreeBSD is the lack of Docker and Kubernetes, which in turn is taken as a sign that FreeBSD cannot serve as a platform for bleeding-edge concepts like microservices and scale-out container orchestration.

While the Linux ecosystem is certainly more diverse, with a broader selection of toolstacks to choose from, this article will show that FreeBSD also provides (more than) a complete set of the tools required to run a Virtual Data Centre (vDC).

For most of the applications used here, alternatives exist in the ports and package collection as well. For example, besides minio there are other distributed storage solutions such as ceph or moosefs.

Usage of Potluck

Potluck images/flavours are a major building block we use both for quickly setting up the environment below and for provisioning containerised services.

So besides showcasing the power of FreeBSD as a platform, this article series aims to give an idea of the concept behind Potluck. Although it is admittedly still at a very early stage, it has the potential to be for complex container images, and for sets of interdependent container images (like nomad, consul and traefik), what the FreeBSD package collection is for individual applications.

The three steps in this article (four, if you include storage) are enough to get a basic configuration of your vDC up and running.

Depending on your network speed, setting up the core configuration of consul, nomad and traefik in step 3 with the Potluck images will only take a few minutes.

At this point, more advanced features such as a high-availability configuration are not yet exposed by the images/flavours.

The plan is to improve this over the coming weeks and months. Last but not least, feel free to open a ticket or pull request on the GitHub page if you come across a bug or have a feature request!

Architecture

Components

Our vDC is built on the following tools and applications:

- FreeBSD as base system

- FreeBSD Jails as container platform and pot as jail management tool

- minio and s3fs as distributed storage layer (optional)

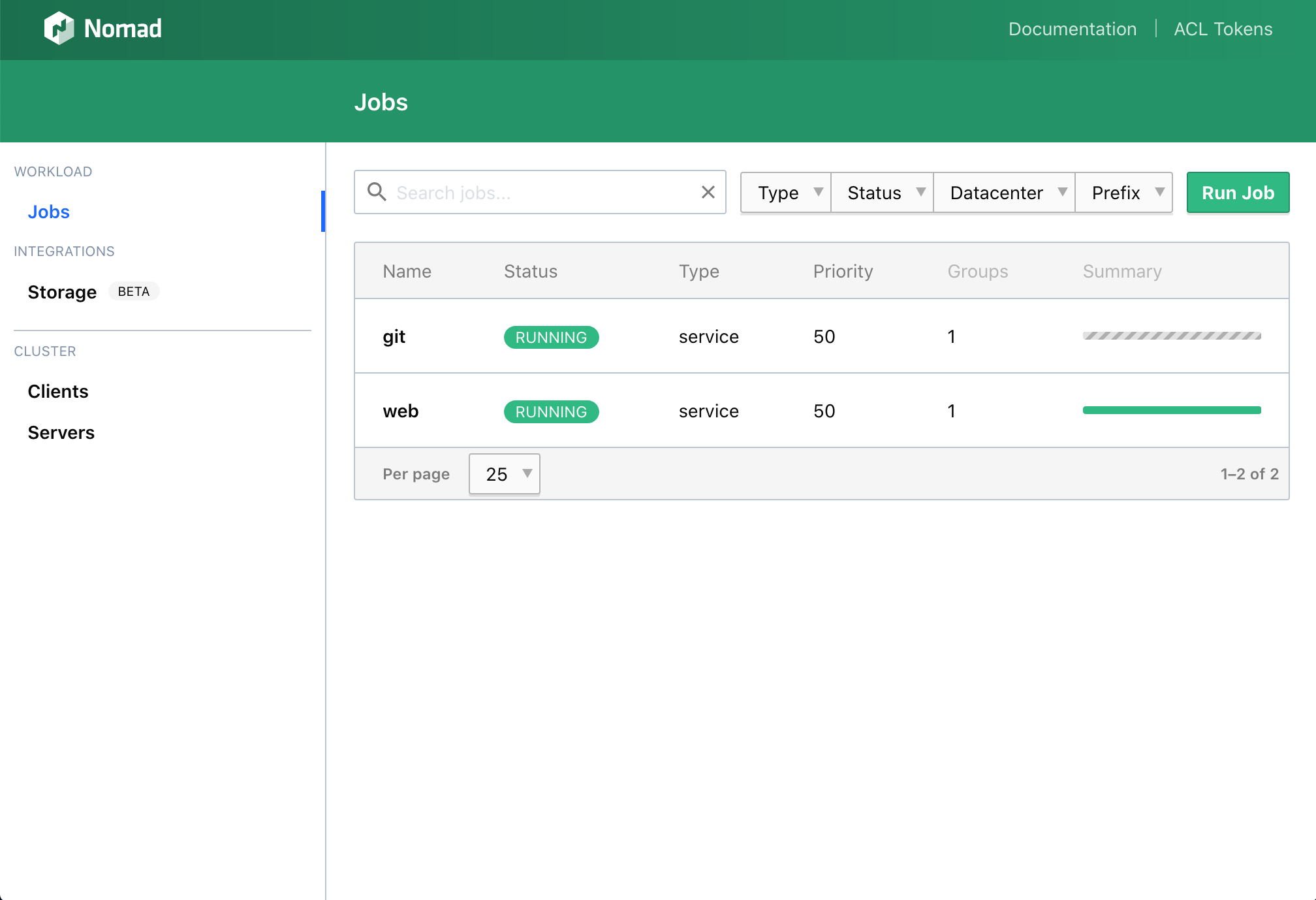

- Nomad with pot driver as service orchestration platform

- Potluck as artifactory (like Dockerhub)

- Consul as service discovery platform

- Traefik as reverse proxy

Please note that for testing pot, nomad, consul and traefik on a single system, there is a FreeBSD package called minipot that can simply be installed with pkg install minipot.

While minipot is an excellent and simple demonstration of the basic setup, this article aims to lay the foundation for a setup that can be turned into an actual production environment.

Systems Used

We assume you have at least two (physical or virtual) hosts in your configuration:

- Consul/Nomad/Traefik server (here 10.10.10.10). You could of course also run these services on separate hosts.

- At least one Nomad compute node (client) (here 10.10.10.11). In real life you will probably have more than one such node.

Step 1 - Installing Pot

Installing pot is simple. Run the following commands as root on each of your hosts (10.10.10.10 and 10.10.10.11):

```shell
$ pkg install pot
...
$ echo kern.racct.enable=1 >> /boot/loader.conf
$ sysrc pot_enable="YES"
$ pot init -v
```

Setting kern.racct.enable=1 is not mandatory, but it is required if you want pot (and thus also nomad) to be able to limit the resource usage of jails.

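Since kern.racct.enable is a boot-time tunable, reboot both hosts for the setting to take effect. Afterwards, sysctl kern.racct.enable should report a value of 1.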
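Appending with echo ... >> adds a duplicate line every time the step is repeated, for example when a host is re-provisioned. As a minimal sketch, a small POSIX sh helper can append a line only if it is not already present. The name ensure_line is hypothetical and not part of pot or FreeBSD:

```shell
#!/bin/sh
# ensure_line FILE LINE
# Hypothetical helper: append LINE to FILE unless an identical line already exists.
# grep flags: -q quiet, -x match the whole line, -F treat LINE as a fixed string.
ensure_line() {
    file=$1
    line=$2
    if [ -f "$file" ] && grep -qxF -- "$line" "$file"; then
        return 0    # already present, nothing to do
    fi
    printf '%s\n' "$line" >> "$file"
}
```

Usage, as root on each host: ensure_line /boot/loader.conf kern.racct.enable=1. On the FreeBSD hosts themselves, sysrc -f /boot/loader.conf kern.racct.enable=1 produces an equivalent, idempotent setting without a custom helper.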

Step 2 - Optional: Storage Configuration

Why Network Storage?

Note: This chapter is optional for setting up the environment described here. You can also run the two example nomad jobs without any network storage, since the example cluster only has one compute node anyway, or you can leave out the persistent mount-in storage completely for test purposes.

The basic idea of service orchestration is to distribute services across different hosts based on capacity demands and requirements. The containers (jails) are created on a compute node when they are started and destroyed when they are stopped.

If these services need read (or possibly write) access to storage that outlives the service on a given host (e.g. database files or data directories), outside storage has to be mounted into the containers when they are initialised.

In principle, any network file system such as NFS can be used for this; just make sure the same shares are mounted at the same path on every host that could potentially be selected to run the service.
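Before scheduling jobs, it can help to verify on each compute node that the share really is mounted at the expected path, rather than the job silently writing into an empty local directory. A minimal sketch, assuming POSIX df -P output (six columns, mount point last); the helper name is_mountpoint is hypothetical:

```shell
#!/bin/sh
# is_mountpoint DIR
# Hypothetical helper: succeed if DIR is itself a mount point, i.e. the file
# system that `df -P` reports for DIR is mounted exactly at DIR.
# Limitations: pass DIR without a trailing slash; paths with spaces are not handled.
is_mountpoint() {
    dir=$1
    [ -d "$dir" ] || return 1
    mnt=$(df -P "$dir" | awk 'NR == 2 { print $6 }')
    [ "$mnt" = "$dir" ]
}
```

For example, is_mountpoint /mnt/s3bucket || echo "share not mounted" could be run on every node that might be selected for a job.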

Our Setup Using minio

Since scale-out storage is a good fit for a scale-out compute platform, you could follow the minio setup guide to create a distributed storage cluster and then simply mount the buckets on each compute node with s3fs via /etc/fstab.

If you mount the buckets with s3fs, please note that due to a bug in fusefs-s3fs you will not be able to chmod directories so that they are actually readable by non-root users within the jails (e.g. by the nginx user in the example job).
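Since chmod is not an option, what non-root users inside the jails can access is determined by the mount-wide umask option instead. As a quick sanity check, and assuming s3fs applies the umask to a base mode of 0777 the same way a process umask works, the resulting permission bits can be computed with shell arithmetic. effective_mode is a hypothetical helper, not part of s3fs:

```shell
#!/bin/sh
# effective_mode UMASK
# Hypothetical helper: print, in octal, the permission bits that remain when
# UMASK is cleared from a base mode of 0777 (rwxrwxrwx).
effective_mode() {
    # The leading 0 makes the shell treat the umask as an octal constant.
    printf '%o\n' $(( 0777 & ~0$1 ))
}
```

For example, effective_mode 002 prints 775 (rwxrwxr-x): the owner and group get full access, while all other users, such as nginx inside a jail, can still read and traverse directories. A strict umask such as 077 would yield 700 and lock them out.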

Therefore, mount the bucket with appropriately lax umask permissions, as in the following /etc/fstab entry, which you need to add on the 10.10.10.11 node (assuming you otherwise followed the two articles above to set up minio):

```
test-bucket /mnt/s3bucket fuse rw,_netdev,uid=1001,gid=1001,allow_other,umask=002,mountprog=/usr/local/bin/s3fs,late,passwd_file=/usr/local/etc/s3fs.accesskey,url=https://10.10.10.10:9000,use_path_request_style,use_cache=/tmp/s3fs,enable_noobj_cache,no_check_certificate,use_xattr,complement_stat 0 0
```
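The entry reads the minio credentials from the file given in passwd_file (/usr/local/etc/s3fs.accesskey). s3fs expects one ACCESS_KEY_ID:SECRET_ACCESS_KEY line and is strict about credential files readable by other users. A minimal sketch for creating it with owner-only permissions; write_s3fs_passwd is a hypothetical helper name:

```shell
#!/bin/sh
# write_s3fs_passwd FILE ACCESS_KEY SECRET_KEY
# Hypothetical helper: write an s3fs credentials file in the
# ACCESS_KEY_ID:SECRET_ACCESS_KEY format, readable only by its owner.
write_s3fs_passwd() {
    file=$1
    # umask 077 in a subshell: a newly created file starts out as mode 600.
    ( umask 077 && printf '%s:%s\n' "$2" "$3" > "$file" )
    # Also tighten the mode if the file already existed with looser permissions.
    chmod 600 "$file"
}
```

Usage, as root on 10.10.10.11: write_s3fs_passwd /usr/local/etc/s3fs.accesskey 'ACCESS_KEY' 'SECRET_KEY', substituting your minio keys. After creating the /mnt/s3bucket directory, mount /mnt/s3bucket lets you test the fstab entry without rebooting.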